What is QWQ 32B?

In the rapidly evolving landscape of artificial intelligence, a groundbreaking model has emerged, challenging conventional size-performance paradigms: the QWQ 32B.

This AI reasoning model is creating waves due to its remarkable efficiency and ability to perform comparably to significantly larger AI systems. Unlike the trend of scaling up models with ever-increasing parameters, the QWQ 32B stands out for its compact design and resource-conscious approach.

QWQ 32B is a 32 billion parameter AI reasoning model. This means it has the capacity to process and learn from vast amounts of data to deduce and understand complex concepts and relationships. What makes it truly special is its ability to achieve comparable results to models with hundreds of billions of parameters, effectively doing more with less.

This AI model, QWQ 32B, is often compared to a David versus Goliath situation in the AI world, with its 32 billion parameters competing against models with hundreds of billions of parameters. QWQ 32B is turning heads because it’s getting really impressive results even though it’s actually pretty small. This can bring new AI capabilities to a wider range of researchers and developers and make AI more accessible overall.

The key is a combination of innovative design and advanced training methodologies that allow this AI model to extract maximum value from every parameter and every computational resource used during its training. QWQ 32B is a testament to the idea that efficiency and effectiveness are not solely dependent on Scale, but also on smarter approaches to model architecture and training.

The Core Technology: Reinforcement Learning (RL)

At the heart of QWQ 32B's success lies its utilization of reinforcement learning (RL). This approach allows the model to learn through trial and error, optimizing its reasoning abilities based on feedback and rewards. It’s about learning like you would train a pet.

Unlike traditional Supervised learning methods that rely on labeled data, RL empowers the model to explore and discover optimal strategies independently.

In reinforcement learning, the AI model interacts with an environment, taking actions and receiving rewards or penalties based on those actions. Over time, the model learns to associate certain actions with positive outcomes, refining its decision-making process to maximize its cumulative reward. This iterative process allows the model to adapt to complex and dynamic environments, making it well-suited for AI reasoning tasks.

How Reinforcement Learning Works:

- Environment Interaction: The AI model interacts with a virtual environment, similar to a Game or simulation.

- Action Selection: The model selects actions based on its current state and knowledge.

- Reward System: The model receives feedback in the form of rewards or penalties based on the consequences of its actions.

- Policy Optimization: The model adjusts its strategy (policy) to maximize its expected cumulative reward over time.

The developers of QWQ 32B have developed clever recipes for scaling RL. They find the optimal way to reward the model as it’s learning. Reinforcement learning enables AI models like QWQ 32B to continuously refine their reasoning abilities and achieve impressive levels of performance. This leads to the ability to perform math and coding tasks effectively and efficiently.

The utilization of Reinforcement Learning (RL) in QWQ 32B enables it to reach next level stuff in artificial intelligence.

Parameters: The Brain Cells of AI Models

The term "parameters" is often used in the context of AI models, but what does it actually mean? Parameters can be thought of as the brain cells of the AI model.

They represent the model's learned knowledge and influence its decision-making process.

In essence, parameters are the numerical values that are adjusted during the training process to minimize the difference between the model's predictions and the actual outcomes. Each parameter contributes to the model's overall behavior, and the more parameters a model has, the more complex Patterns it can learn.

However, it's important to note that simply increasing the number of parameters does not always lead to better performance. Overly complex models can suffer from overfitting, where they become too specialized to the training data and fail to generalize well to new, unseen data.

The following is a quick comparison of parameter counts in leading AI models:

| AI Model |

Parameter Count |

Description |

| QWQ 32B |

32 Billion |

Designed for efficiency, achieves high performance with a relatively small number of parameters. |

| DeepSeek R1 |

Hundreds of Billions |

High performance due to large parameter count and Mixture of Experts Architecture. |

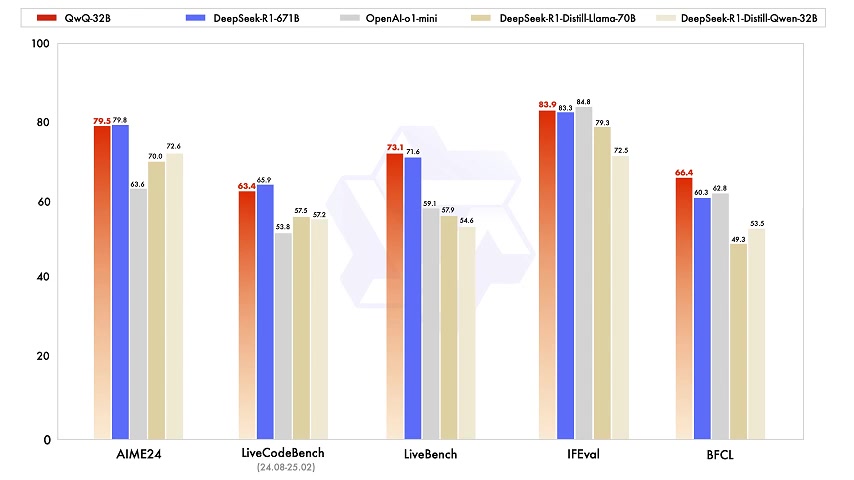

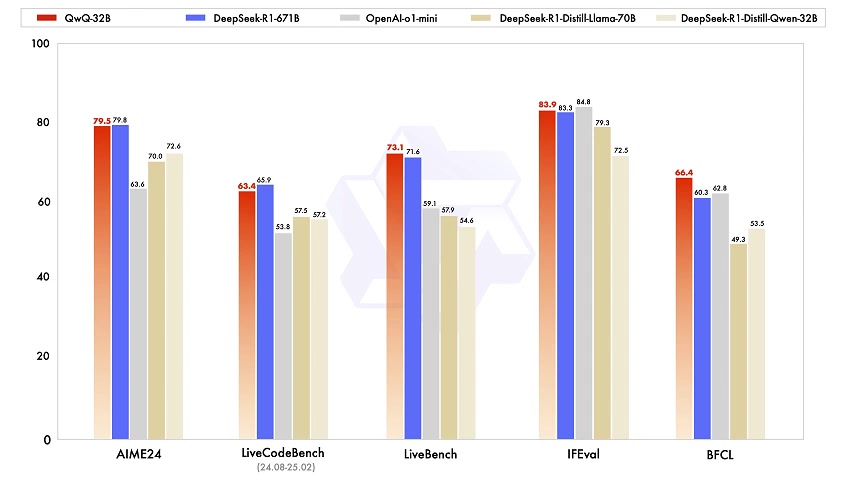

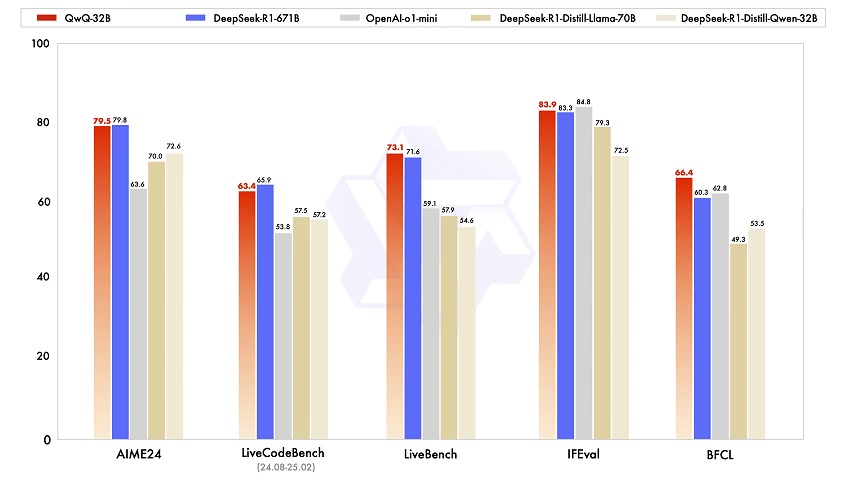

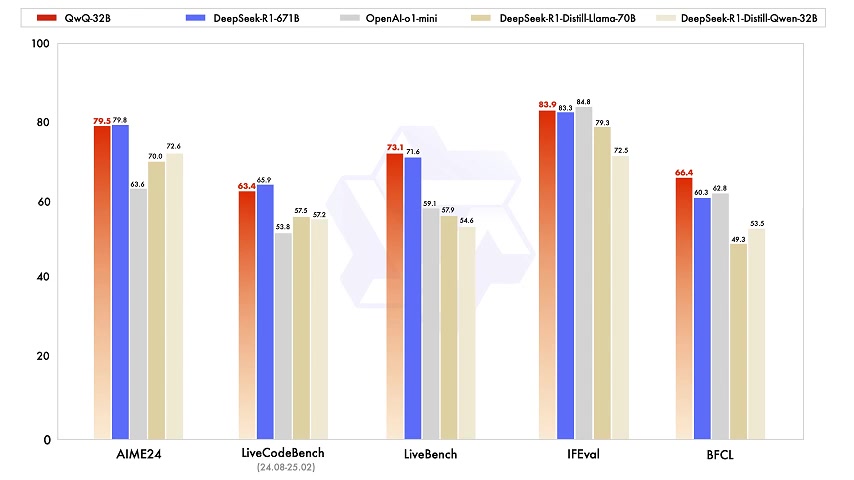

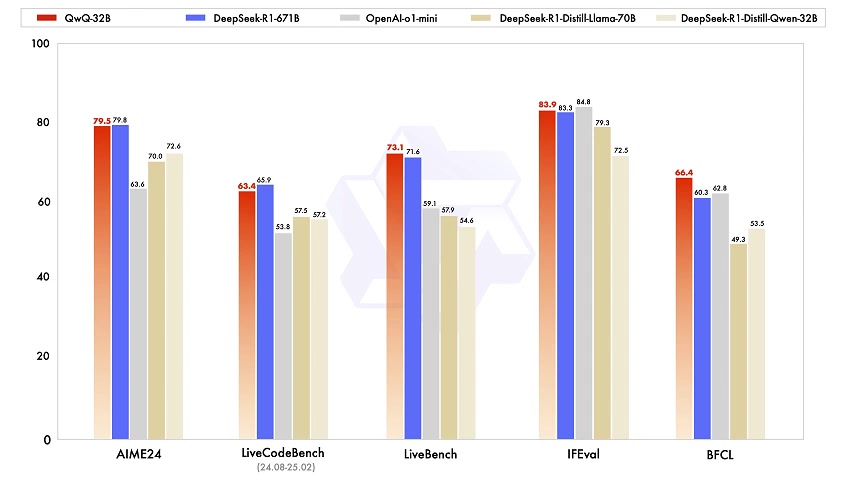

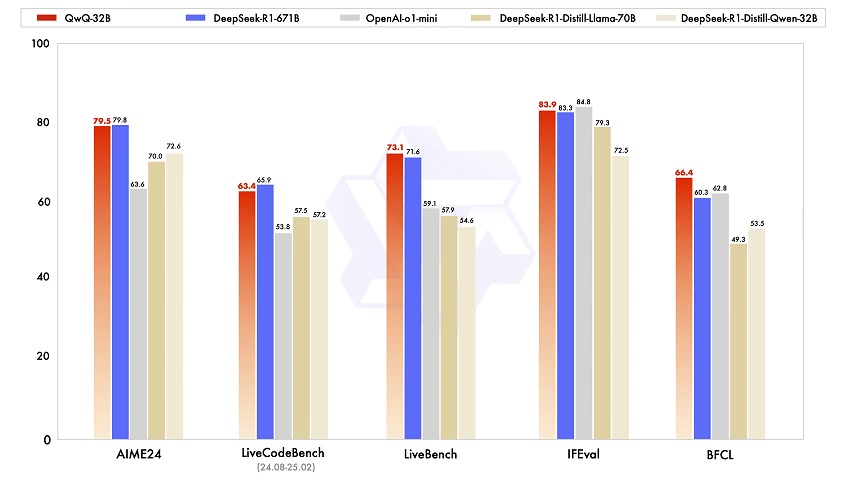

QWQ 32B has an optimal balance of the number of parameters and efficiency. Although QWQ 32B has a significantly smaller number of parameters than deepseek R1, it performs similarly on many AI reasoning tasks.

The ability to achieve competitive results with fewer parameters opens up exciting possibilities for AI deployment on resource-constrained devices and reduces the computational costs associated with training and running large AI models. QWQ 32B and others like it are helping bring Artificial Intelligence to new researchers and other parties.

< 5K

< 5K

0

0

< 5K

< 5K

100%

100%

0

0

884.5K

884.5K

22.78%

22.78%

0

0

< 5K

< 5K

0

0

< 5K

< 5K

2

2

WHY YOU SHOULD CHOOSE TOOLIFY

WHY YOU SHOULD CHOOSE TOOLIFY