AI Secured


AI Secured Tool Information

What is AI Secured?

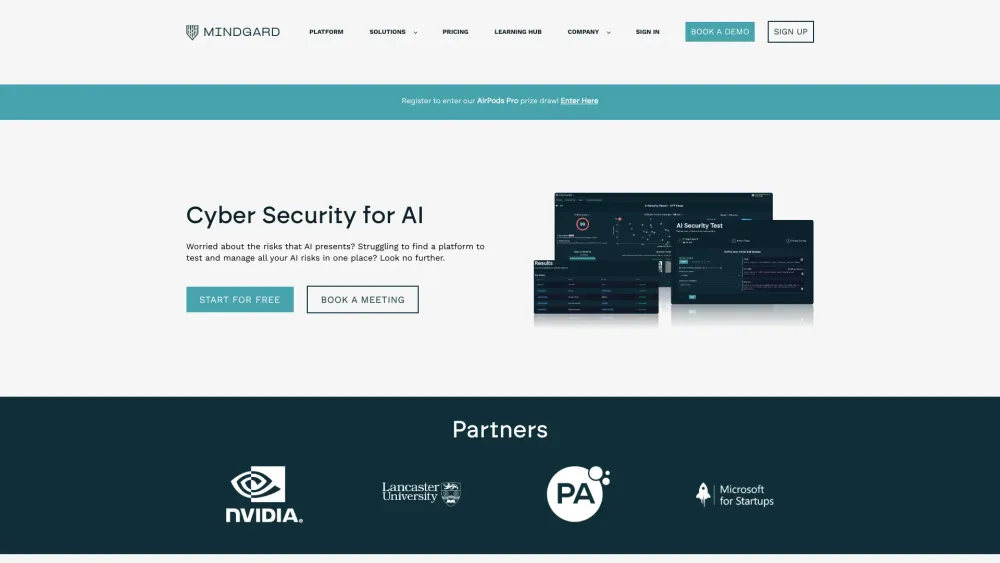

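AI Secured protects your AI/ML models, including LLMs and GenAI, across their entire lifecycle, whether they run in in-house or third-party solutions. It does this through automated security testing, remediation, threat detection, and a market-leading AI threat library.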

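How do I use AI Secured?

Sign up for an account on the AI Secured website. Connect your AI/ML models to the platform. Set up automated security testing and threat detection. Use the market-leading AI threat library to get insights and remediation strategies.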

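Core Features of AI Secured

Automated security testing

Threat detection

Market-leading AI threat library

Full-lifecycle protection

Seamless integration with existing security ecosystems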

Use Cases for AI Secured

#1

Securing AI, GenAI, and LLMs

#2

Automating AI security for ML systems

#3

Protecting AI assets across the entire lifecycle

Frequently Asked Questions about AI Secured

Why should I use AI Secured?

AI Secured Support Email, Customer Service, and Refund Contacts

For more contact options, visit the contact us page (https://mindgard.ai/contact-us?hsLang=en).

AI Secured Company Information

AI Secured company name: Mindgard Ltd.

AI Secured company address: Second Floor, 34 Lime Street, London, EC3M 7AT.

For more about AI Secured, visit the about us page (https://mindgard.ai/about-us?hsLang=en).

AI Secured Login

AI Secured login link: https://sandbox.mindgard.ai/

AI Secured Sign Up

AI Secured sign-up link: https://sandbox.mindgard.ai/

AI Secured Pricing

AI Secured pricing link: https://mindgard.ai/pricing?hsLang=en

AI Secured LinkedIn

AI Secured LinkedIn link: https://www.linkedin.com/company/mindgard/
