Readme

About

An MVP Cog implementation of ostris/ai-toolkit

(Training currently only works with FLUX.1-dev)

How to use

In the TRAIN tab (between README and VERSIONS) you’ll see the parameters you can set to train a LoRA.

For destination, select or create an empty Replicate model location to store your LoRAs (ex: lucataco/flux-loras).

For images, upload a zip or tar file of your training images. Each file name must be that image's caption, ex: a_bird_in_the_style_of_TOK.png

For model_name, use “black-forest-labs/FLUX.1-dev”

For hf_token, use your Hugging Face token to access the Flux-Dev weights for training. Make sure the Access Token has the right permissions: “Read access to public gated repos you can access”

For steps, select a value from 500 to 4000.

The other parameters are optional.
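As a rough illustration of the parameters above, here is a minimal Python sketch that collects the form values and checks the basics before you start a run. The function name build_train_inputs, the default of 1000 steps, and the exact checks are assumptions made for this example; they are not part of this model or of the Replicate API.

```python
from typing import Optional

# Range suggested in this README for the steps parameter.
MIN_STEPS, MAX_STEPS = 500, 4000


def build_train_inputs(
    destination: str,
    images: str,
    hf_token: str,
    steps: int = 1000,  # assumed default, matching the example run in this README
    model_name: str = "black-forest-labs/FLUX.1-dev",
    repo_id: Optional[str] = None,
) -> dict:
    """Hypothetical helper: gather the TRAIN tab values into one dict."""
    owner, _, name = destination.partition("/")
    if not owner or not name or "/" in name:
        raise ValueError("destination should look like 'owner/model-name'")
    if not MIN_STEPS <= steps <= MAX_STEPS:
        raise ValueError(f"steps should be between {MIN_STEPS} and {MAX_STEPS}")
    if not hf_token:
        raise ValueError("hf_token is needed to access the gated weights")

    inputs = {
        "destination": destination,
        "images": images,
        "model_name": model_name,
        "hf_token": hf_token,
        "steps": steps,
    }
    # repo_id is optional; include it only when auto-upload is wanted.
    if repo_id:
        inputs["repo_id"] = repo_id
    return inputs
```

For example, build_train_inputs("lucataco/flux-loras", "images.zip", "hf_...", steps=1000) returns a dict you can compare against the form before submitting.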

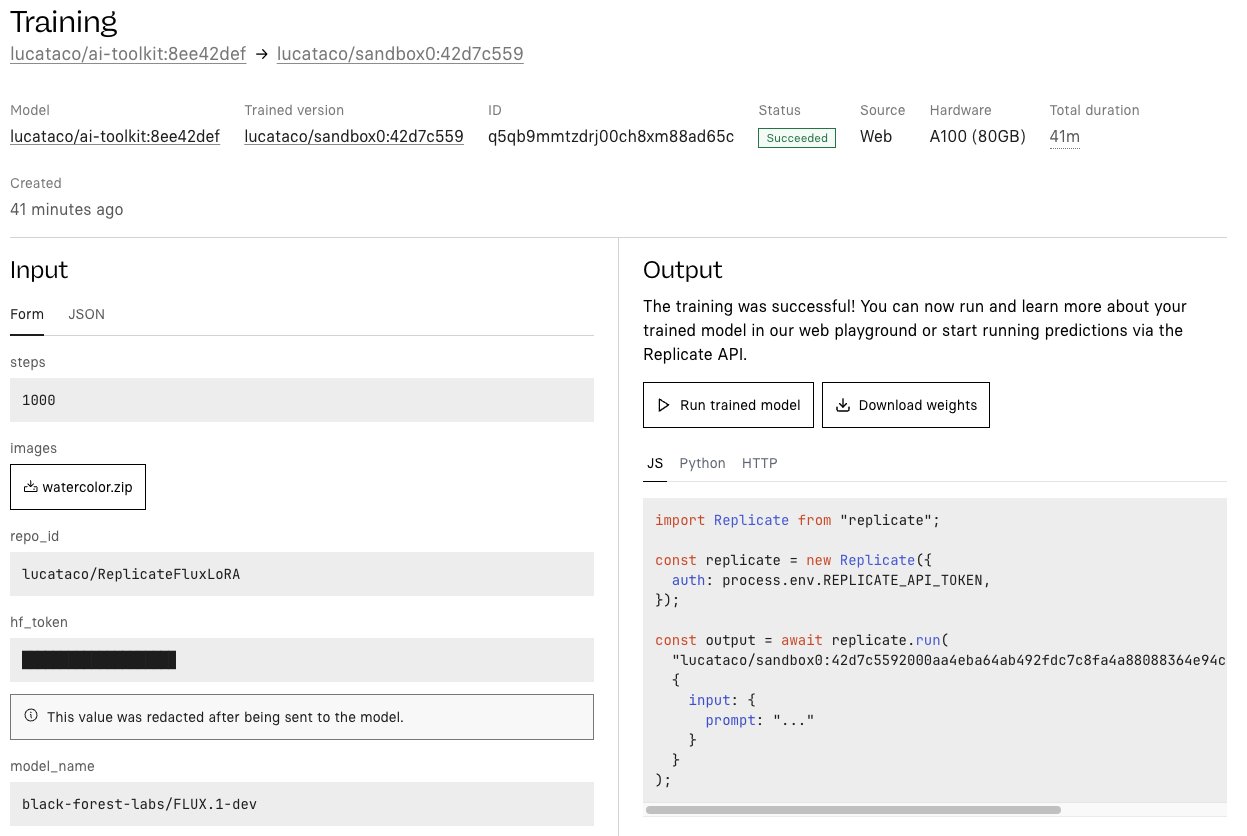
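To prepare the images upload described above, a small script can name each file after its caption and bundle the folder into a zip. The sketch below is one possible way to do it; the helper names, the underscore convention for spaces, and the list of image suffixes are assumptions for illustration, so adjust them to your dataset.

```python
import zipfile
from pathlib import Path
from typing import List

# Assumed set of image formats; adjust to what your dataset contains.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def caption_to_filename(caption: str, suffix: str = ".png") -> str:
    """Turn a caption into a file name by joining its words with underscores."""
    return "_".join(caption.split()) + suffix


def zip_training_images(image_dir: str, zip_path: str) -> List[str]:
    """Write every image in image_dir to a flat zip and return the names added."""
    added = []
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(Path(image_dir).iterdir()):
            # Skip sub-folders and non-image files (including the zip itself).
            if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
                archive.write(path, arcname=path.name)
                added.append(path.name)
    return added
```

Calling caption_to_filename("a bird in the style of TOK") gives a_bird_in_the_style_of_TOK.png, which matches the naming example in this README.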

Example Training run:

Below is an example training run to create a style LoRA, trained on 16 watercolor images for 1000 steps:

How to test your LoRA

Once you have an Output.zip file, you can download it, extract the safetensors file, and upload it to a Hugging Face model repo (ex: lucataco/flux-dev-lora). If you added a model name for the Train parameter repo_id at the bottom, then this should be done for you automatically.
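If you handle the upload yourself, the extraction step can be scripted. This is a minimal sketch that pulls every .safetensors file out of the downloaded archive; the function name is hypothetical, and because the internal layout of Output.zip is not described here, it simply searches all entries by suffix.

```python
import zipfile
from pathlib import Path
from typing import List


def extract_safetensors(zip_path: str, out_dir: str) -> List[Path]:
    """Extract only the .safetensors members of a zip and return their paths."""
    target = Path(out_dir)
    target.mkdir(parents=True, exist_ok=True)
    extracted = []
    with zipfile.ZipFile(zip_path) as archive:
        for member in archive.namelist():
            # The archive layout is an unknown here, so match on suffix only.
            if member.endswith(".safetensors"):
                extracted.append(Path(archive.extract(member, path=target)))
    return extracted
```

The returned paths point at the extracted weights, ready to be uploaded to your model repo.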

With your LoRA in a Hugging Face model under your repo_id (ex: lucataco/flux-dev-lora), go to the LoRA Explorer model and try it out. In this example, I trained a watercolor style LoRA, so to activate the LoRA I would use the prompt: “a boat in the style of TOK”

License

All Flux-Dev LoRAs have the same license as the original base model, FLUX.1-dev.

If you choose the option to auto-upload your trained LoRA to Hugging Face, this license will be added for you.