wanglab / medsam-vit-base

Model last updated: May 03 2023

mask-generation

Introduction to medsam-vit-base

Runs of wanglab medsam-vit-base on huggingface.co

Total runs: 1.9K

24-hour runs: 0

3-day runs: 165

7-day runs: -71

30-day runs: -3.9K

More Information About medsam-vit-base huggingface.co Model

URL of medsam-vit-base

medsam-vit-base huggingface.co URL

Provider of medsam-vit-base huggingface.co

Other API from wanglab

| Total runs | Run Growth | Growth Rate |
|---|---|---|
| 2.0K | 112 | 5.60% |
| 127 | 121 | 95.28% |
| 117 | 112 | 95.73% |
| 116 | 113 | 97.41% |
| 108 | 102 | 94.44% |
| 12 | 2 | 16.67% |
| 8 | 4 | 50.00% |
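The page does not say how the growth rate is computed. The listed figures are, however, consistent with dividing run growth by total runs and expressing the result as a percentage. The sketch below checks that reading against the numbers above; the function name `growth_rate_percent` is hypothetical, and the rounded total "2.0K" is assumed to mean 2000.

```python
def growth_rate_percent(run_growth: float, total_runs: float) -> float:
    """Return run growth as a percentage of total runs, rounded to 2 decimals.

    Assumption: this formula is inferred from the listed figures,
    not documented by the page itself.
    """
    if total_runs == 0:
        raise ValueError("total_runs must be non-zero")
    return round(100.0 * run_growth / total_runs, 2)


# (total runs, run growth, listed growth rate in %), copied from the listing.
# Assumption: "2.0K" is treated as exactly 2000.
rows = [
    (2000, 112, 5.60),
    (127, 121, 95.28),
    (117, 112, 95.73),
    (116, 113, 97.41),
    (108, 102, 94.44),
    (12, 2, 16.67),
    (8, 4, 50.00),
]

for total, growth, listed in rows:
    computed = growth_rate_percent(growth, total)
    # Print the computed rate next to the listed one for comparison.
    print(f"total={total:>5} growth={growth:>4} computed={computed:6.2f}% listed={listed:6.2f}%")
```

Under that assumption, every computed value matches the listed rate to two decimal places.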

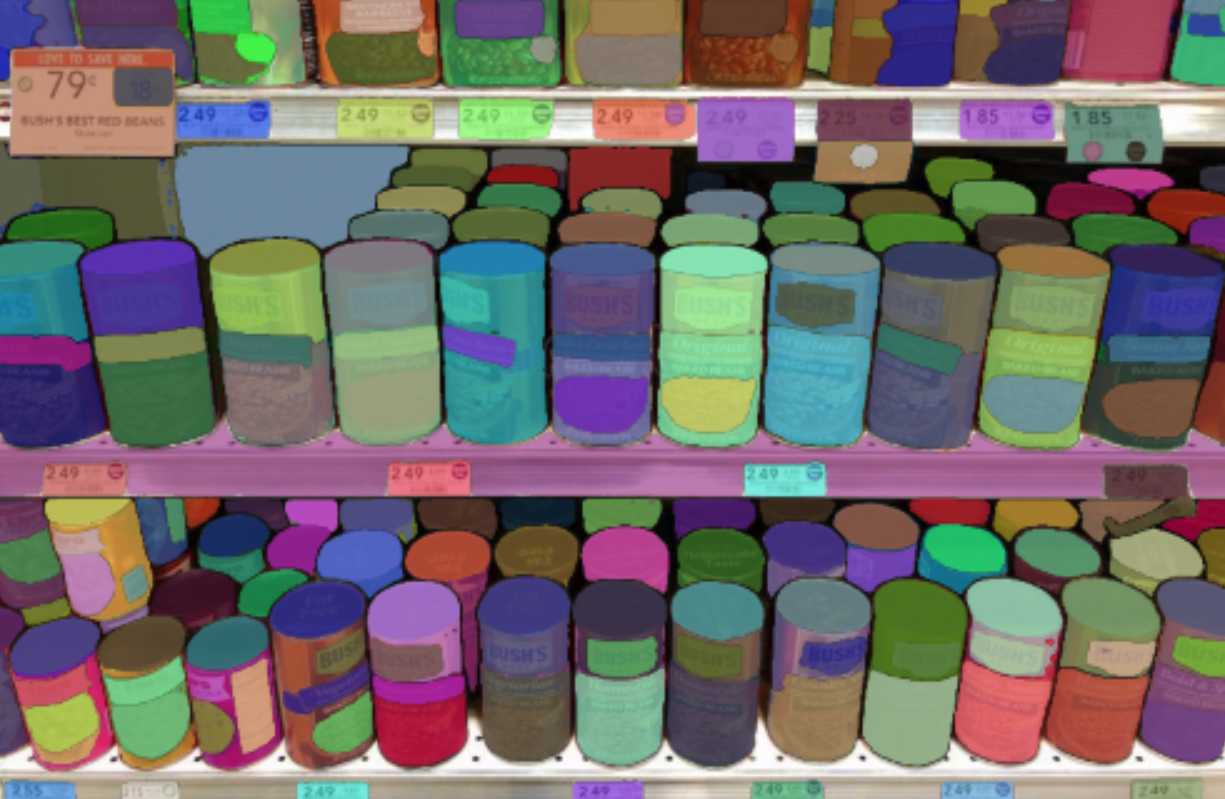

Detailed architecture of Segment Anything Model (SAM).
