LiteLLM

What is LiteLLM?

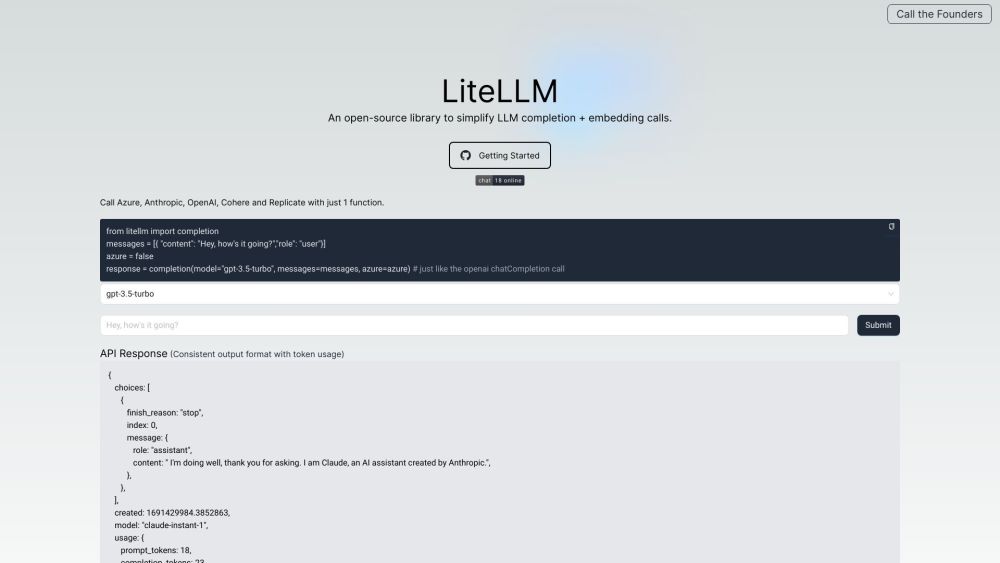

LiteLLM is an open-source library that simplifies LLM completion and embedding calls. It offers a convenient, easy-to-use interface for calling different LLMs.

How to use LiteLLM?

To use LiteLLM, import the 'litellm' library and set the environment variables for the LLM API keys you need (for example, OPENAI_API_KEY and COHERE_API_KEY). Once those variables are set, you can write a Python function and make LLM completion calls through LiteLLM. LiteLLM also provides a demo playground where you can write Python code, view the outputs, and compare different LLMs.
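The workflow described here can be sketched roughly as follows. This is a minimal sketch, not an official example: the helper names (`missing_keys`, `build_messages`, `ask`, `compare_models`) are hypothetical and introduced only for illustration. It assumes the `litellm` package is installed and that `gpt-3.5-turbo` and `command-nightly` are available to your API keys.

```python
import os

# Environment variables named in the text; other providers use other variables.
REQUIRED_KEYS = ("OPENAI_API_KEY", "COHERE_API_KEY")


def missing_keys(env=None):
    """Return the required API-key variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_KEYS if not env.get(name)]


def build_messages(prompt):
    """Wrap a prompt in the OpenAI-style chat message list that LiteLLM accepts."""
    return [{"role": "user", "content": prompt}]


def ask(model, prompt):
    """Send one prompt to one model and return the reply text."""
    # Imported lazily so the helpers above work without litellm installed.
    from litellm import completion

    response = completion(model=model, messages=build_messages(prompt))
    return response.choices[0].message.content


def compare_models(models, prompt):
    """Hypothetical helper: collect each model's reply to the same prompt."""
    absent = missing_keys()
    if absent:
        raise RuntimeError("Missing environment variables: " + ", ".join(absent))
    return {model: ask(model, prompt) for model in models}
```

Calling `compare_models(["gpt-3.5-turbo", "command-nightly"], "Hello, how are you?")` would return a dictionary mapping each model name to its reply, which makes a side-by-side comparison straightforward. The call signature is the same for both providers; only the model string changes.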

LiteLLM's Core Features

LiteLLM's core features include simplified LLM completion and embedding calls, support for multiple LLMs (such as GPT-3.5-turbo and Cohere's command-nightly), and a demo playground for comparing LLMs.

LiteLLM's Use Cases

LiteLLM can be used for a range of natural language processing tasks, such as text generation, language understanding, and chatbot development. It is suitable both for research and for building applications that need LLM capabilities.

LiteLLM FAQ

Which LLMs does LiteLLM support?

Can LiteLLM be used for research purposes?

Does LiteLLM have its own pricing?

What is the demo playground in LiteLLM?

LiteLLM Discord

Here is the LiteLLM Discord: https://discord.com/invite/wuPM9dRgDw. For more Discord messages, please click here(/de/discord/wupm9drgdw).

LiteLLM GitHub

LiteLLM GitHub link: https://github.com/BerriAI/litellm

